30,000 BCE: From the firelit cave paintings of Chauvet and, later, Lascaux to the birth of painting, architecture, and other arts, we have been attempting to recreate both the world around us and the imagination within us.

4th century BCE: Zhuangzi dreams he is a butterfly, then wonders whether he is a butterfly dreaming he is a man. Are dreams also simulations?

Once Zhuang Zhou dreamed he was a butterfly, a fluttering butterfly. What fun he had, doing as he pleased! He did not know he was Zhou. Suddenly he woke up and found himself to be Zhou. He did not know whether Zhou had dreamed he was a butterfly or a butterfly had dreamed he was Zhou. Between Zhou and the butterfly there must be some distinction. This is what is meant by the transformation of things. During our dreams we do not know we are dreaming. We may even dream of interpreting a dream. Only on waking do we know it was a dream. Only after the great awakening will we realize that this is the great dream.

~380 BCE: Plato likens the uneducated to prisoners in a cave unable to turn their heads. A fire behind them casts shadows of puppets, also behind them, such that all they can see are the puppets' shadows on the wall in front. Such prisoners mistake appearance for reality.

The allegory is intended to show that the names we give to things, which allow us as prisoners to converse about what we see, are in fact names for things that we cannot see but can only grasp with the mind. That is, the real meaning of the words we use is not something we can ever perceive with our senses alone. Yet we can only know this by being liberated from the illusion of the shadows.

1637-1672: René Descartes invents conventions for analytic geometry and algebraic approaches to geometry, which is why we still describe space with X, Y and Z axes and call these "Cartesian" coordinates. He believed that algebra was a method to automate reasoning.

Descartes also uses methodological skepticism to question his existence and perception, asking whether he is dreaming or whether things are externally real. Influenced by the mechanical automatons of his time, he draws attention to the problem of the connection between body and mind, inadvertently launching a dualism that dominated Western thought thereafter and that still shapes, and complicates, thinking about VR.

"VR opens the door to what Jaron Lanier (who coined the term virtual reality in the 1980s) calls “post-symbolic communication”: No longer are we limited to communicating via sequences of symbols represented by audible vibrations of our vocal chords, or produced by our fingers pressing on a series of keys or, more recently, a flat piece of glass. Instead, you experience my dream directly, without having to interpret long strings of verbal or written symbols... The medium, the place where those stories will unfold, exists within our consciousness. We’ll find ourselves having passed through our long-held, precious frames to live within those stories. And we’ll carry the memory of those stories not as content that we once consumed, but as times and spaces we existed within." - source

1800s: A popular wave of massive-scale panorama paintings, often housed in dedicated buildings and usually depicting landscapes and/or historic events.

At the same time, the first attempts to capture permanent images from the camera obscura (itself inspired by caves...) through chemical means mark the birth of photography.

1838: Sir Charles Wheatstone invents the stereoscope.

1895/1935: L'Arrivée d'un Train

The film of a train moving directly towards the camera, shot in 1895, was said to have terrified spectators at its first screening, a claim that has been called an urban legend. What many film histories leave out is that the Lumière brothers were trying to achieve a 3D image even before this first-ever public exhibition of motion pictures, and later re-shot the film in stereoscopic 3D, first screened in 1935. Given the contradictory accounts that plague the history of early cinema and pre-cinema, it's plausible that early cinema historians conflated the audience reactions to the 2D and 3D screenings of L'Arrivée d'un Train.

1901: Author L. Frank Baum first mentions the idea of electronic display spectacles that overlay data onto real life (in this case, onto people); the device is called a 'character marker'.

1935: Stanley G. Weinbaum's short story "Pygmalion's Spectacles" describes a goggle-based virtual reality system with holographic recording of fictional experiences, including smell and touch: "You are in the story, you speak to the shadows (characters) and they reply, and instead of being on a screen, the story is all about you, and you are in it."

1939: The View-Master stereoscopic viewer is launched.

1943: Patent filed for a head-mounted stereo TV.

1929-1950s: The Link Trainer, a mechanical flight simulator with motion simulation, is eventually used by over 500,000 pilots.

1950s-60s: The "golden era" of 3D cinema.

1957–62: Morton Heilig, a cinematographer, creates and patents a mechanical simulator called Sensorama with visuals, sound, vibration, and smell.

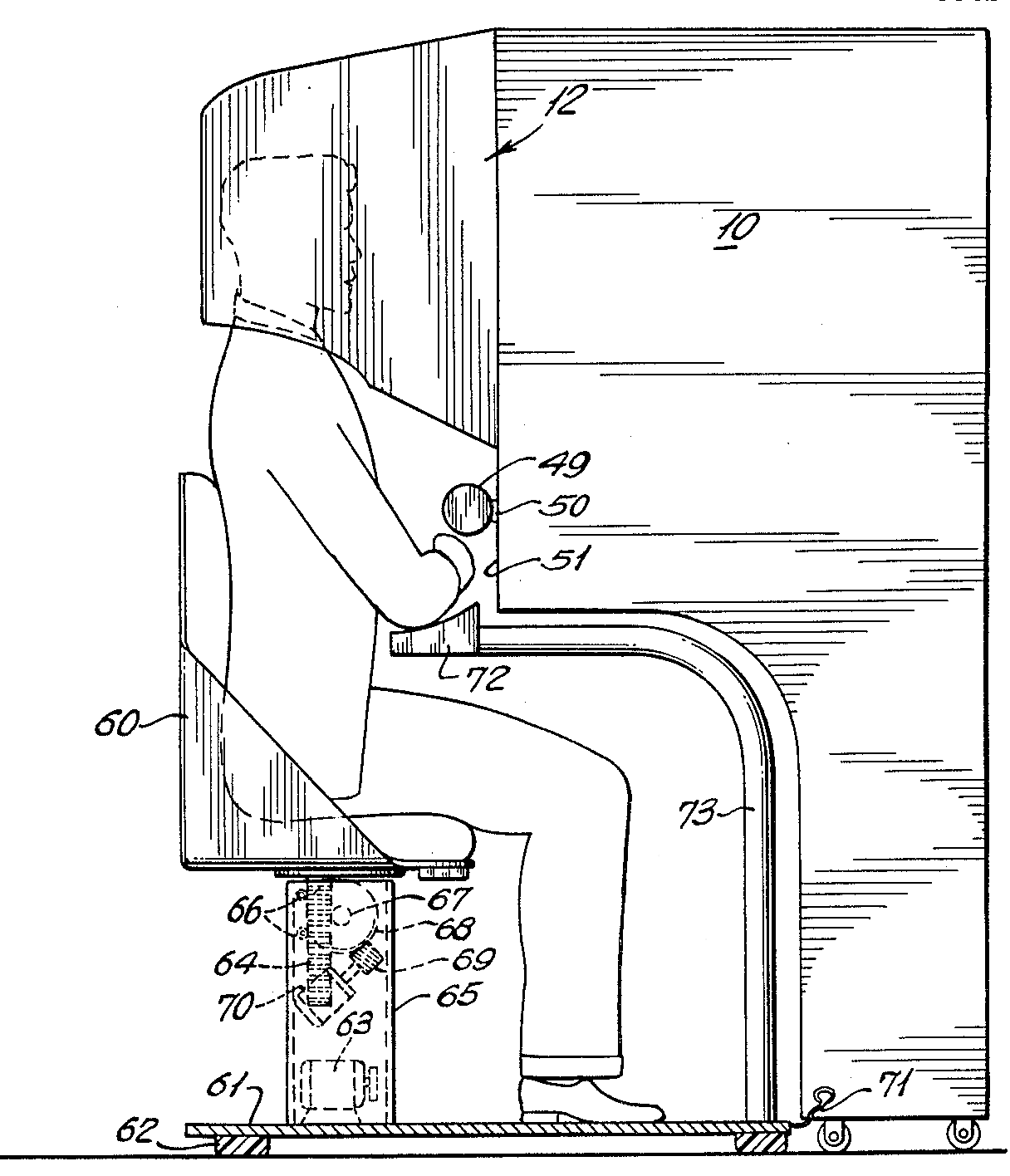

In 1960 Heilig was also granted a patent for a multisensory HMD, the Telesphere Mask.

"When anything new comes along, everyone, like a child discovering the world, thinks that they've invented it, but you scratch a little and you find a caveman scratching on a wall is creating virtual reality in a sense." - Morton Heilig

1961: Philco Headsight is the first HMD, used for remote camera viewing (CCTV), including head orientation tracking.

1963: Ivan Sutherland's Sketchpad, one of the first interactive graphics programs.

Also in 1963: Hugo Gernsback (of "Hugo Awards" fame) is photographed wearing his TV Glasses for Life magazine.

1964: New York inventor and holographer Gene Dolgoff, who is also the inventor of the digital projector, creates a holography laboratory. Dolgoff's obsession with holography included theories of "matter holograms", the holographic nature of the universe, and the holographic nature of the human brain.

1965: Ivan Sutherland writes "The Ultimate Display", an essay that has inspired everything from the Holodeck to The Matrix:

"The ultimate display would, of course, be a room within which the computer can control the existence of matter. A chair displayed in such a room would be good enough to sit in. Handcuffs displayed in such a room would be confining, and a bullet displayed in such a room would be fatal. With appropriate programming such a display could literally be the Wonderland into which Alice walked."

1968: Ivan Sutherland's Sword of Damocles, widely considered to be the first virtual reality (VR) and augmented reality (AR) head-mounted display (HMD) system, is built with DARPA support.

The next twenty years see slow but steady development of VR technologies, largely within military, industrial, and scientific research institutions, with a gradual infiltration into popular culture.

1974: The Holodeck concept appears in Star Trek: The Animated Series, and reappears in 1987 in Star Trek: The Next Generation.

1975: Myron Krueger creates Videoplace, allowing users to interact with virtual objects for the first time. His book "Artificial Reality" articulates an art form whose primary material is real-time interaction itself.

1977: Star Wars features a hologram (Leia's message for Kenobi) and some of the first widely-seen 3D computer graphics in film (the Death Star plans).

1978: Aspen Movie Map -- a proto-Street View, interactive via laserdisc, that also had a polygonal mode.

1979: The LEEP HMD, with lenses designed for a very wide field of view.

1980: Steve Mann creates the first wearable computer, a computer vision system with text and graphical overlays on a photographically mediated reality.

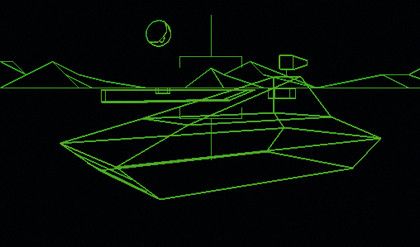

Battlezone becomes the first big 3D vector-graphics success in arcade games; it was thought so realistic that the US Army used it to train tank gunners.

1982: Atari founds a VR research lab

Tron movie

1983: Brainstorm movie.

1984: William Gibson writes Neuromancer, bringing wide acclaim to the cyberpunk genre.

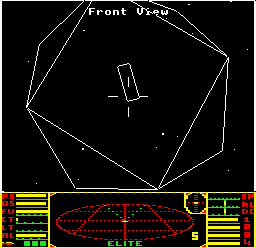

Elite, an open-world space trading video game published by Acornsoft for the BBC Micro and Acorn Electron computers, features revolutionary 3D graphics.

1985: Jaron Lanier (formerly of the Atari lab) coins the phrase Virtual Reality and creates the first commercial business ("VPL") around virtual worlds.

VR at NASA:

1989: Shadowrun, a tabletop role-playing game set in a near-future cyberpunk + VR world.

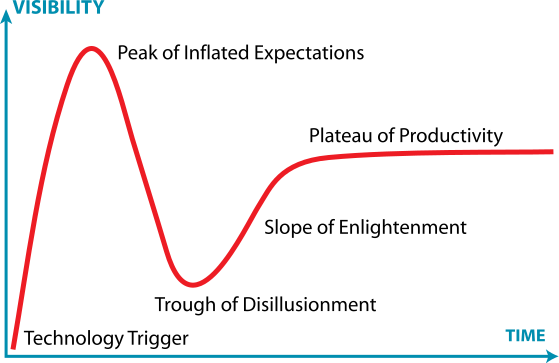

The 1990s saw a wave of public interest and hype in VR; as it grew, VR was often conflated with cybernetics, AI, computer graphics in general, the nascent internet, etc., under the banner of "cyberspace".

1991: The Virtuality company launches a new multiplayer hardware prototype in several countries -- but at $73,000 per unit! Sega also announces a VR headset for its console, though it is never released.

EVL in Chicago launches the first cube-shaped CAVE VR system. CAVEs were later commercialized by Mechdyne, WorldViz and others, and new systems are still being installed in research labs around the world today.

The virtual retinal display, which scans images directly onto the retina, is developed and later commercialized by Microvision.

Computer Gaming World magazine predicts "Affordable VR by 1994".

ABC Primetime covers the VR scene (from vrtifacts.com):

1992: Neal Stephenson writes Snow Crash

Lawnmower Man movie.

Sega's Virtua Racing and Virtua Fighter (1993) popularize polygonal 3D games.

1994: The first version of the Virtual Reality Modeling Language (VRML), a standard for sharing interactive 3D vector graphics on the web, is introduced; by 1997 several 3D chat environments exist.

1994: Topological Slide, by Michael Scroggins & Stewart Dickson.

Paper: Absolute Animation and Immersive VR.

1995: Maurice Benayoun creates the VR artwork Tunnel under the Atlantic, connecting the Pompidou Centre in Paris and the Museum of Contemporary Art in Montreal with 3D modeling, video chat, spatialized sound, and AI.

Strange Days and Johnny Mnemonic movies.

A mini documentary:

The same year was also identified as the 'death of VR': Nintendo releases the Virtual Boy for US$180 and discontinues it just six months later ("nail in the coffin for '90s VR"). A 2007 survey by Computerworld magazine listed VR as the 7th biggest technology flop in history.

What went wrong?

1996: Quake pioneers online play of first-person shooters over the Internet.

3dfx Interactive releases the Voodoo chipset, leading to the first affordable 3D accelerator cards for personal computers. Within a few years, dedicated 3D graphics cards built around graphics processing units (GPUs) become essential for most video games, and GPU performance wars rapidly increase real-time 3D rendering capabilities at consumer prices.

Meanwhile, although VR still captures some science-fiction attention and is slowly being rediscovered through the web, it develops mainly in research labs and continues to grow steadily in big-budget industrial, science & health research, as well as military training, outside the media radar.

"VR was used to visualize oil fields and to visualize machinery to extract oil more efficiently from old fields. Similar things happened in medicine. We understand more about large molecules, we understand more about how the body heals from surgery through VR simulations." - Whatever happened to VR -- interview with Jaron Lanier (2007)

1999: The Matrix and eXistenZ movies.

2001: Grand Theft Auto III is released, popularizing 3D open-world games with a non-linear style of gameplay.

2005: The AlloSphere

Over this period VR also gradually begins to appear on the web.

1999: Entrepreneur Philip Rosedale forms Linden Lab to develop hardware for 360-degree VR, but this soon transforms into a platform for 3D socializing, launching Second Life in 2003.

2007: Google Street View launches.

VR goes into the garage, then goes mainstream again

2009: A teenage Palmer Luckey announces his home-made HMD in a BBS post and works on it in his parents' garage over the next couple of years; it will become the Oculus Rift.

2012: John Carmack (lead programmer of Doom, Quake, and many other pioneering 3D games) introduces a duct-taped head-mounted display based on Luckey's prototype at the Electronic Entertainment Expo. Palmer's company, Oculus VR, launches a Kickstarter campaign to fund development of the Rift. It is phenomenally successful, raising US$2.4 million.

2013: The first Oculus Rift developer kit (DK1) ships, for $300. Developer kits are released to give developers a chance to create content in time for the Rift's consumer release; many have also been bought by virtual reality enthusiasts for general use.

Although Luckey mainly saw the HMD as a way to play first-person shooters, artists seize the chance to show, radically, what else VR could be. A couple of examples from 2013:

Gender Swap is an experiment that uses the themachinetobeanother.org/ system as a platform for embodiment experience (a neuroscience technique in which users can feel as if they were in a different body). To create the brain illusion we use the immersive Oculus Rift head-mounted display and first-person cameras. To create this perception, both users have to synchronize their movements: if the movement of one does not correspond to that of the other, the embodiment experience does not work. This means that both users have to constantly agree on every movement they make. Throughout this experiment, we aim to investigate issues like Gender Identity, Queer Theory, feminist technoscience, Intimacy and Mutual Respect.

Also this year: Google announces an open beta test of its Google Glass augmented reality glasses.

2015: SightLine: The Chair, by Frooxius

"This experience is based off the gaze-direction mechanics of the award winning prototype "SightLine", originally developed for the 2013 VR Jam sponsored by Oculus VR and IndieCade."

More than 100,000 Oculus DK2s ($350) have shipped. (Oculus VR had been acquired by Facebook for $2 billion in 2014.)

Microsoft announces HoloLens augmented reality headset.

HTC partners with Valve Corporation to develop the HTC Vive headset and controllers, released early 2016.

2016: Announced as "the year of VR".

2017-2018

Industry focus has shifted from hardware to content problems:

Michael Abrash, Chief Scientist, Oculus: "The future of VR lies in the unique experiences that get created in software, and if I knew what those would be, even in broad outline, I would be very happy."

There's huge investment, affordable platforms, ready authoring tools and delivery networks, and proven use cases.

Augmented/Virtual Reality revenue forecast revised to hit $120 billion by 2020

2019-2021

Things slow down a little -- there is talk of a "VR Winter" -- but there is a steady rollout of new HMDs, along with phone-based AR, WebVR / WebXR, huge sales of the standalone Oculus Quest, a gradual arrival of AAA games, HMDs in workplaces, etc.

(See also The Rise and Fall and Rise of Virtual Reality and Introduction to Virtual Reality)

2022-2024

Metaverse hype peaks (Facebook and its Oculus brand are renamed Meta) and then crashes amid crypto scandals.

Multi-user sandbox games in VR, and in arena-style physical venues

New device successes signal steps toward XR with video pass-through -- Quest, Apple Vision Pro, ...

Film/animation depends on a perceptual illusion -- persistence of vision.

https://youtu.be/YismwdgMIRc?si=SpklCRNzvUtFrbLq

This is easier to understand via animation: around 12-15 frames per second is enough for the brain to interpret as movement, but only when successive images are plausible enough to be fused. Plausibility in this case is a function of neurophysiology and cognition. (Of course, cinema also depends on other perceptual quirks, such as the brain's acceptance of cuts in editing even though nothing like a cut exists in real life, the suspension of disbelief through non-human perspectives, and so forth.)

https://youtu.be/KyEcMEqeKpo?si=i2i5aFBL65rQ3ZCC&t=1587

https://youtu.be/zXqIKlCzqL0?si=iBHxF-VK79Ib50co

Stereoscopic 3D (S3D) builds on another perceptual illusion. Presenting each eye with a viewpoint slightly displaced laterally emulates the parallax effect -- one of the most powerful visual cues to impart depth (distance). Again, this depends on the human body, and it also requires very careful alignment. Some people, though few, experience discomfort due to discrepancies between the stereoscopic 3D depth cue and other cues that are lacking, such as accommodation: the eyes' focus stays at the fixed screen distance while vergence follows the stereoscopic depth.
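The strength of the binocular cue falls off quickly with distance, and simple geometry makes this concrete. The sketch below assumes a typical interpupillary distance (IPD) of about 63 mm, an assumed value rather than one from this text, and computes the vergence angle: the angle between the two lines of sight when both eyes fixate a point straight ahead.

```python
import math

# Assumed typical adult interpupillary distance, in metres (illustrative value).
DEFAULT_IPD_M = 0.063


def vergence_angle_deg(distance_m: float, ipd_m: float = DEFAULT_IPD_M) -> float:
    """Angle between the two eyes' lines of sight when fixating a point
    straight ahead at distance_m (symmetric convergence)."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def relative_disparity_deg(near_m: float, far_m: float,
                           ipd_m: float = DEFAULT_IPD_M) -> float:
    """Difference in vergence between two points: roughly the binocular
    disparity the visual system must resolve to separate them in depth."""
    return vergence_angle_deg(near_m, ipd_m) - vergence_angle_deg(far_m, ipd_m)


if __name__ == "__main__":
    for d in (0.3, 1.0, 3.0, 10.0, 30.0):
        print(f"{d:5.1f} m: vergence {vergence_angle_deg(d):6.3f} deg")
    # The same 1 m step in depth is far easier to see up close than far away.
    print(f"1 m vs 2 m:   {relative_disparity_deg(1.0, 2.0):.3f} deg")
    print(f"10 m vs 11 m: {relative_disparity_deg(10.0, 11.0):.4f} deg")
```

Because the angle shrinks roughly in proportion to 1/distance, stereoscopic depth is most powerful for nearby content and contributes progressively less for distant scenery.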

The crucial addition for VR is head tracking: the displayed image is updated according to the pose of our head. A very basic example is "fishtank VR", in which the view on an ordinary monitor changes as the viewer's tracked head moves.
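Fishtank VR treats the monitor as a window: as the tracked head moves, the viewing frustum becomes asymmetric ("off-axis"). A minimal sketch, assuming a flat screen centred at the origin in the z = 0 plane, the eye at positive z, and OpenGL `glFrustum`-style parameters (left, right, bottom, top measured on the near plane); screen size and eye positions are illustrative.

```python
from typing import NamedTuple


class Frustum(NamedTuple):
    left: float
    right: float
    bottom: float
    top: float
    near: float


def off_axis_frustum(eye_x: float, eye_y: float, eye_z: float,
                     screen_w: float, screen_h: float,
                     near: float = 0.1) -> Frustum:
    """Asymmetric frustum for an eye at (eye_x, eye_y, eye_z) looking through a
    screen_w x screen_h rectangle centred at the origin in the z = 0 plane.
    The screen edges are projected onto the near plane by similar triangles."""
    if eye_z <= 0:
        raise ValueError("eye must be in front of the screen (eye_z > 0)")
    scale = near / eye_z
    return Frustum(
        left=(-screen_w / 2 - eye_x) * scale,
        right=(screen_w / 2 - eye_x) * scale,
        bottom=(-screen_h / 2 - eye_y) * scale,
        top=(screen_h / 2 - eye_y) * scale,
        near=near,
    )


if __name__ == "__main__":
    # A 60 x 40 cm monitor viewed from 60 cm away.
    print(off_axis_frustum(0.0, 0.0, 0.6, 0.6, 0.4))  # centred head: symmetric
    print(off_axis_frustum(0.1, 0.0, 0.6, 0.6, 0.4))  # head moved right: skewed
```

In this setup the camera keeps facing the screen; only its position follows the head, so the view matrix is just a translation by the negative eye position. For stereo, the function would be called once per eye with positions offset by half the IPD.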

With full head-mounted display or CAVE style VR, the trick is to present a coherent image regardless of what direction we face, resulting in the impression that the image entirely surrounds us -- it becomes our sensorium.

The illusion is far more effective with position tracking: matching the lateral movements of the head as well as its orientation, so that you can look around, over and under things, and generally benefit from more kinds of depth cues we experience in real life, as well as further reducing the chance of nausea.

However, this illusion breaks down if the delay between head movement and the updated image (motion-to-photon latency) exceeds roughly 20 milliseconds, which underlies the need for high frame rates (90 fps for current desktop models) to avoid nauseating "judder".
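The arithmetic behind the frame-rate requirement is simple: at a given refresh rate, each frame has 1000 / rate milliseconds to be rendered and displayed. This sketch uses the ~20 ms motion-to-photon figure cited in this text as the latency budget; the refresh rates are just examples.

```python
import math


def frame_time_ms(refresh_hz: float) -> float:
    """Time available for one frame at the given display refresh rate."""
    return 1000.0 / refresh_hz


def whole_frames_within(budget_ms: float, refresh_hz: float) -> int:
    """How many complete frame intervals fit inside a latency budget."""
    return math.floor(budget_ms / frame_time_ms(refresh_hz))


if __name__ == "__main__":
    for hz in (60, 72, 90, 120):
        print(f"{hz:3d} Hz: {frame_time_ms(hz):5.2f} ms per frame, "
              f"{whole_frames_within(20.0, hz)} whole frame(s) within 20 ms")
```

At 90 Hz only one full frame interval fits inside 20 ms, so a single missed frame leaves little or no margin, which helps explain why a dropped frame is felt as judder rather than as a merely lower frame rate.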

Surround sound in audio has a very long history of development. The currently favored technique for spatial audio in VR is called Ambisonics, invented in the mid-1970s by a small group of British academics including Michael Gerzon. Ambisonics essentially represents the direction from which sounds reach us, and this can be reproduced over an array of loudspeakers (as we have in the lab), or over head-tracked headphones in VR using HRTF profiles that model how we spatially localize sounds using our ears.
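To make "represents the direction from which sounds reach us" concrete, here is a minimal sketch of first-order Ambisonic encoding plus the yaw rotation that head tracking requires. It assumes the traditional B-format convention (W weighted by 1/√2, azimuth counter-clockwise from straight ahead, positive to the left); other conventions such as AmbiX order and scale the channels differently. Decoding to loudspeakers or HRTFs is not shown.

```python
import math
from typing import NamedTuple


class BFormat(NamedTuple):
    """One sample of a first-order Ambisonic signal (traditional W, X, Y, Z)."""
    w: float  # omnidirectional component
    x: float  # front-back figure-of-eight
    y: float  # left-right figure-of-eight
    z: float  # up-down figure-of-eight


def encode(sample: float, azimuth_deg: float, elevation_deg: float = 0.0) -> BFormat:
    """Encode a mono sample arriving from the given direction.
    Azimuth is counter-clockwise from straight ahead (positive = left)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return BFormat(
        w=sample / math.sqrt(2.0),  # traditional (FuMa-style) W weighting
        x=sample * math.cos(az) * math.cos(el),
        y=sample * math.sin(az) * math.cos(el),
        z=sample * math.sin(el),
    )


def compensate_head_yaw(b: BFormat, head_yaw_deg: float) -> BFormat:
    """Rotate the sound field opposite to the listener's head yaw
    (positive = head turned left) so sources stay fixed in the world.
    W and Z are unaffected by a rotation about the vertical axis."""
    psi = math.radians(head_yaw_deg)
    c, s = math.cos(psi), math.sin(psi)
    return BFormat(w=b.w, x=b.x * c + b.y * s, y=-b.x * s + b.y * c, z=b.z)


if __name__ == "__main__":
    ahead = encode(1.0, azimuth_deg=0.0)
    # After the head turns 90 degrees left, a source that was ahead is on the right.
    print(compensate_head_yaw(ahead, 90.0))
```

Because the whole sound field is rotated with a small matrix, head tracking costs the same however many sources are mixed into it, which is one reason the format suits VR.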

This models the direction of sound, but not the distance. To model distance we typically use a combination of cues, including attenuation (sounds get quieter as they are further away) and filtering (sounds get less bright when further away, while very close sounds have a lot more bass energy). In addition, we may model the spatial reverberation of a room or space using signal processing effects (reverbs).
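A minimal sketch of the first two distance cues, under stated assumptions: gain follows the inverse-distance law (about -6 dB per doubling of distance) relative to a 1 m reference, and "brightness" is reduced with a one-pole low-pass filter whose cutoff falls with distance. The cutoff-versus-distance mapping is a hypothetical placeholder, not a measured air-absorption model; near-field bass boost and reverberation are not modelled.

```python
import math
from typing import List


def distance_gain(distance_m: float, ref_distance_m: float = 1.0,
                  min_distance_m: float = 0.1) -> float:
    """Inverse-distance law: amplitude halves (about -6 dB) per doubling of distance.
    Distances are clamped so very close sources do not produce huge gains."""
    return ref_distance_m / max(distance_m, min_distance_m)


def to_db(gain: float) -> float:
    return 20.0 * math.log10(gain)


def cutoff_for_distance_hz(distance_m: float) -> float:
    """Hypothetical mapping for illustration: near sources keep full bandwidth,
    distant ones sound progressively duller."""
    return 20000.0 / (1.0 + distance_m / 10.0)


def one_pole_lowpass(samples: List[float], cutoff_hz: float,
                     sample_rate_hz: float = 48000.0) -> List[float]:
    """First-order low-pass filter: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate_hz)
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out


def render_at_distance(samples: List[float], distance_m: float,
                       sample_rate_hz: float = 48000.0) -> List[float]:
    """Apply both cues: the source gets duller and quieter as it moves away."""
    gain = distance_gain(distance_m)
    dull = one_pole_lowpass(samples, cutoff_for_distance_hz(distance_m), sample_rate_hz)
    return [gain * s for s in dull]


if __name__ == "__main__":
    for d in (1.0, 2.0, 4.0, 16.0):
        print(f"{d:5.1f} m: {to_db(distance_gain(d)):6.1f} dB, "
              f"cutoff {cutoff_for_distance_hz(d):7.0f} Hz")
```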

VR & XR greatly benefit from wide field of view, high resolution, and other factors of immersion. The result is that viewers no longer perceive an image plane worn in front of the eyes, and instead perceive themselves as present in another world. Instead of an image moving in front of your eyes, the world appears as a fixed space in which you are moving your own head.

(This also means that stereoscopic content can be as close as your nose, something that 3D cinema cannot normally achieve because of the limits of the frame).

The combination of all the above can create a compelling immersive experience. Together with the qualities of content, this leads to the evocation of presence, the sense of actually being-there in the world; a continuous illusion of non-mediation.

But aside from presence, VR can also maximize interaction (the extent to which a user can manipulate objects and the environment of the system) and autonomy (the system's ability to receive and react to external stimuli, such as actions performed by a user). In that regard, convincing experiences created by real-time simulations that support agency -- the ability to take meaningful action in a world and discover meaningful consequences -- are just as essential.

However, the agency, presence, and immersion of VR can go catastrophically wrong, leading to nausea and "simulator sickness".

Simulation Sickness is a syndrome, which can result in eyestrain, headaches, problems standing up (postural instability), sweating, disorientation, vertigo, loss of colour to the skin, nausea, and - the most famous effect - vomiting. It is similar in effects to motion sickness, although technically a different thing. Simulation sickness can occur during simulator or VR equipment use and can sometimes persist for hours afterwards... If VR experiences ignore fundamental best practices, they can lead to simulator sickness—a combination of symptoms clustered around eyestrain, disorientation, and nausea. - Article on Gamasutra - by Ben Lewis-Evans on 04/04/14

Simulator sickness involves three kinds of issues:

Which is to say, virtual worlds can be dangerous! See this 1996 NBC special:

In fact, simulator sickness has been known since the earliest flight simulators of the 1950s, but it is still not fully understood. It is clearly triggered by "cue conflicts", in which what the visual system reports does not match what other senses (such as the vestibular and proprioceptive systems) report.

(Some researchers, for example at the Mayo Clinic, hope to alleviate VR nausea with galvanic vestibular stimulation, but as yet this hasn't convinced the industry.)

Susceptibility varies widely from person to person. It generally affects younger people less, and tends to decrease with increased exposure (getting your "VR legs"). People with a history of motion sickness, alcohol/drug abuse, etc. also tend to be more susceptible.

Around 5% of all individuals will never acclimate regardless of how much they try to build up a resistance to it, meaning there is a confirmed minority of individuals who will never be able to use Virtual Reality as a mainstream product over their lifetime. - Sim Sickness guide on Oculus forums

Since nausea/sim-sickness remains one of the greatest risks to virtual reality's success, it is essential to consider in the design of an experience.

To some extent this is a hardware problem -- and recent advances in VR hardware and drivers have gone a long way toward minimizing the risk. However, it still deeply affects how we design our content, and it is important to understand.

Latency is the time it takes for a signal to pass through a system. Motion-to-photon latency measures how long it takes for a change in head rotation to be reflected in a change in the image perceived. It should be consistently under 20 milliseconds to avoid nausea. Failing to achieve this can result in sluggish or sloppy motion tracking, in which the world 'swims' around you. Even occasional hiccups will be experienced as a disturbing "judder" that is never experienced in normal life. The Oculus and Vive hardware now run at 90 fps, and their drivers use some tricks to help keep latency down, but latency also depends crucially on the content and quality of the software.
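A first-order way to see why latency makes the world "swim": during a head turn the displayed image reflects where the head was latency milliseconds ago, so the world appears displaced by roughly angular speed times latency. The head speed and the pixels-per-degree figure below are illustrative assumptions, and the sketch ignores the prediction and reprojection tricks that drivers use.

```python
def swim_error_deg(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """Angular offset between where the world should appear and where it is drawn."""
    return head_speed_deg_per_s * latency_ms / 1000.0


def swim_error_px(head_speed_deg_per_s: float, latency_ms: float,
                  pixels_per_degree: float) -> float:
    """The same offset in display pixels (pixels_per_degree is display-specific)."""
    return swim_error_deg(head_speed_deg_per_s, latency_ms) * pixels_per_degree


if __name__ == "__main__":
    PPD = 15.0  # assumed pixels per degree, for illustration only
    for latency in (10.0, 20.0, 50.0):
        print(f"{latency:4.0f} ms at 100 deg/s: "
              f"{swim_error_deg(100.0, latency):.1f} deg, "
              f"{swim_error_px(100.0, latency, PPD):.0f} px")
```

Even within the 20 ms budget a brisk head turn leaves the image a couple of degrees behind, which is why every millisecond in the pipeline matters.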

Anything that can potentially interrupt or slow down rendering, or delay the motion-to-photon pathway, has to be avoided to prevent nausea. The need for low latency and high frame rates is one of the reasons why certain visual details and effects common in video games are eschewed in VR. When the world surrounds you in stereoscopy, geometry is often more important than screen-based post-processing. In particular, many effects popular in games are actually rendered across several frames -- this is simply not viable for VR.

A related issue is image persistence: a low-persistence image has a longer black interval between presenting frames, which reduces the smear/blur/ghosting when moving your head. This is mainly a display screen technology issue and largely resolved in current generation hardware.

The other major cause of nausea is motion cue conflicts, in which the movement portrayed by the images presented is not consistent with real motion of the body (or with an expected motion). This is almost the inverse of motion sickness, and appears to trigger a response in the body consistent with an assumption of being poisoned. Modern life has also brought to us another real-world parallel:

"Imagine you’re on a train and look out the window to see a train leaving the station. As that train begins to move it creates an illusion of movement in your own mind and your brain’s likely conclusion is that the train you are on is actually moving in the opposite direction, that illusion is called “Vection.” Vection occurs when a portion of what you can see moves, and is one of the things that can lead to motion sickness in VR." - 5 ways to reduce motion sickness in VR

There are two important categories of motion cue conflicts to consider:

Any change of velocity (or rotational velocity, i.e. turning) is an acceleration, which imparts a physical force on the body detected primarily via the vestibular system. If such changes occur in the virtual world but are not mirrored in physical vestibular response (e.g. by navigating with a joystick rather than on a treadmill) nausea can very rapidly ensue. One of the most difficult outcomes is that moving a person's perspective through virtual space, without them moving in physical space, is a cue-conflict that can cause significant nausea. Even turning the camera around (rather than looking over your shoulder) is nausea-inducing -- described as "VR poison" by John Carmack.

"Oculus has even devised a new “comfort” rating system, which divides its launch lineup of games into “comfortable,” “moderate,” and “intense” categories." - Virtual Reality’s Locomotion Problem

But preventing an immersant from exploring a virtual world sacrifices one of the most compelling potentials of VR! Some say that the locomotion question is the biggest problem for VR.

To explore vaster worlds we must allow people to move virtually but not physically, via some design compromises that nevertheless minimize triggers of nausea. Some of the strategies available are:

If motion is to be used, here are some known techniques to minimize nausea:

A 1:1 mapping of physical to virtual space lets immersants wander round the available space in a physical room, which might be enough for some applications.

Still, walking around a world is really counterintuitive, as you may have to think about two different spaces simultaneously -- the space you can physically move around in, and the space you can virtually navigate. It is all too easy to walk into a wall, step on a cat, etc.

Redirected walking

The notion here is that while walking in the real space, the virtual world is slightly rotated (below perceptual levels). Although we feel we are walking in a straight line in the virtual space, we are in fact walking in circles in the real world. Problem: still requires much larger spaces than most rooms.
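The geometry can be sketched in a few lines, assuming flat 2D room coordinates and a constant curvature gain: after each step along a straight virtual line, the world is rotated by step length / radius radians, so the physical path bends into a circle of that radius. The 5 m radius and the step size are illustrative assumptions; the point is that the room must contain the whole circle.

```python
import math
from typing import List, Tuple


def injected_yaw_deg_per_m(curvature_radius_m: float) -> float:
    """World rotation added per metre walked to bend the path into a circle."""
    return math.degrees(1.0 / curvature_radius_m)


def physical_path(virtual_distance_m: float, curvature_radius_m: float,
                  step_m: float = 0.05) -> List[Tuple[float, float]]:
    """Room-space (x, y) positions of a user who walks a straight virtual line
    while the virtual world is rotated a little after every step."""
    x = y = heading = 0.0
    path = [(x, y)]
    for _ in range(round(virtual_distance_m / step_m)):
        x += step_m * math.cos(heading)
        y += step_m * math.sin(heading)
        heading += step_m / curvature_radius_m  # the small, unnoticed rotation
        path.append((x, y))
    return path


def room_extent_m(path: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Width and depth of the axis-aligned box the physical path occupies."""
    xs = [p[0] for p in path]
    ys = [p[1] for p in path]
    return max(xs) - min(xs), max(ys) - min(ys)


if __name__ == "__main__":
    radius = 5.0
    path = physical_path(2 * math.pi * radius, radius)
    print(f"{injected_yaw_deg_per_m(radius):.1f} deg/m of injected rotation")
    print("room needed (m):", tuple(round(v, 2) for v in room_extent_m(path)))
    print("distance from start after one lap (m):",
          round(math.dist(path[0], path[-1]), 3))
```

Even with this fairly aggressive (and therefore more noticeable) 5 m radius, the user needs a clear area of about 10 x 10 m, which illustrates the space problem noted above.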

A related method is 1:X motion, in which moving 1 meter in the real world may move you more than 1 meter in the virtual space. Horizontal exaggeration does not appear to induce nausea, but vertical movement should remain 1:1.
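A minimal sketch of 1:X motion, assuming a y-up coordinate system and a hypothetical tracker that reports the head position each frame: horizontal head movement is scaled by a gain, while height is passed through 1:1. Scaling each frame's movement, rather than the absolute position, keeps the virtual viewpoint from jumping if the gain is changed mid-session.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float  # up (assumed y-up convention)
    z: float


class HorizontalGainMapper:
    """Maps tracked (physical) head positions to virtual ones with a 1:X horizontal gain."""

    def __init__(self, start: Vec3, horizontal_gain: float = 1.0):
        self.gain = horizontal_gain
        self._last_physical = start
        self.virtual = start

    def update(self, physical: Vec3) -> Vec3:
        dx = physical.x - self._last_physical.x
        dz = physical.z - self._last_physical.z
        self._last_physical = physical
        self.virtual = Vec3(
            x=self.virtual.x + dx * self.gain,  # exaggerated horizontal motion
            y=physical.y,                       # vertical stays 1:1 (absolute head height)
            z=self.virtual.z + dz * self.gain,
        )
        return self.virtual


if __name__ == "__main__":
    mapper = HorizontalGainMapper(Vec3(0.0, 1.7, 0.0), horizontal_gain=2.0)
    print(mapper.update(Vec3(1.0, 1.7, 0.0)))  # one physical metre -> two virtual metres
    print(mapper.update(Vec3(1.0, 1.2, 0.0)))  # crouching is not exaggerated
```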

Another technique, using eye-tracking, is to insert small camera rotations during natural saccades (our visual system suppresses visual input during a saccade).

Teleportation is one of the most widely used strategies for allowing exploration without inducing cue conflicts, despite being relatively immersion-breaking. It depends on a method for identifying valid locations to teleport to, superimposed onto the world (a kind of AR in VR).
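One way to identify valid teleport locations, sketched under simplifying assumptions: the scene is a hypothetical heightfield function rather than real colliders or a navigation mesh, the pointer follows a ballistic arc from the controller, and a landing point is accepted only if the ground there is gentle enough to stand on and within range. The 30-degree slope limit, 10 m range, and launch speed are illustrative tuning values.

```python
import math
from typing import Callable, Optional, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical scene representation: ground height y as a function of (x, z).
Heightfield = Callable[[float, float], float]

GRAVITY_M_S2 = -9.81


def arc_landing(origin: Vec3, direction: Vec3, speed: float, ground: Heightfield,
                dt: float = 0.01, max_time_s: float = 3.0) -> Optional[Vec3]:
    """Step along a ballistic arc from the controller until it reaches the ground.
    `direction` is assumed to be a unit vector."""
    px, py, pz = origin
    vx, vy, vz = (speed * c for c in direction)
    t = 0.0
    while t < max_time_s:
        px, py, pz = px + vx * dt, py + vy * dt, pz + vz * dt
        vy += GRAVITY_M_S2 * dt
        t += dt
        if py <= ground(px, pz):
            return (px, ground(px, pz), pz)
    return None  # the arc never landed within max_time_s


def slope_deg(ground: Heightfield, x: float, z: float, eps: float = 0.01) -> float:
    """Steepness of the ground at (x, z), from central-difference gradients."""
    dhdx = (ground(x + eps, z) - ground(x - eps, z)) / (2 * eps)
    dhdz = (ground(x, z + eps) - ground(x, z - eps)) / (2 * eps)
    return math.degrees(math.atan(math.hypot(dhdx, dhdz)))


def teleport_target(origin: Vec3, direction: Vec3, speed: float, ground: Heightfield,
                    max_slope_deg: float = 30.0,
                    max_range_m: float = 10.0) -> Optional[Vec3]:
    """Return a valid landing point to highlight, or None if the target is unusable."""
    hit = arc_landing(origin, direction, speed, ground)
    if hit is None:
        return None
    horizontal = math.hypot(hit[0] - origin[0], hit[2] - origin[2])
    if horizontal > max_range_m or slope_deg(ground, hit[0], hit[2]) > max_slope_deg:
        return None
    return hit


if __name__ == "__main__":
    aim = (math.cos(math.radians(45)), math.sin(math.radians(45)), 0.0)
    print(teleport_target((0.0, 1.5, 0.0), aim, 5.0, lambda x, z: 0.0))      # lands ahead
    print(teleport_target((0.0, 1.5, 0.0), aim, 5.0, lambda x, z: 2.0 * x))  # None: too steep
```

The arc shape is a common design choice because it naturally limits range and lets people target ground they cannot see directly, such as the top of a ledge.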

It has been argued that we can handle teleports in VR in much the same way that we handle cuts in TV.

It can also become a game mechanic:

Many developers have suggested a 3rd person (behind the avatar) viewpoint reduces the nausea. Oculus bundled a 3rd-person platformer ("Lucky's Tale") with the first release.

A rather more unusual mode of navigation switches into 3rd person while moving, and back to 1st person when stationary.

Remember that “acceleration” does not just mean speeding up while going forward; it refers to any change in the motion of the user. Slowing down or stopping, turning while moving or standing still, and stepping or getting pushed sideways are all forms of acceleration. - Best practices, Oculus

Instant accelerations are better than smoothing
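One way to see the reasoning, as a sketch: the vestibular mismatch arises only while virtual velocity is changing, so counting the frames in which speed changes shows how an instant change confines the conflict to a single frame while an eased ramp stretches it out. The 1.4 m/s target matches the human walking speed quoted in this text; the 90-frame window and 45-frame ramp are arbitrary.

```python
from typing import List


def speed_profile(target_speed: float, frames: int, ramp_frames: int = 0) -> List[float]:
    """Per-frame virtual speed starting from rest. ramp_frames=0 means an instant
    change; otherwise speed eases up linearly over ramp_frames frames."""
    if ramp_frames <= 0:
        return [target_speed] * frames
    return [target_speed * min(1.0, (i + 1) / ramp_frames) for i in range(frames)]


def accelerating_frames(speeds: List[float], start_speed: float = 0.0,
                        tol: float = 1e-9) -> int:
    """Count frames in which speed changed, i.e. frames containing an acceleration."""
    count, previous = 0, start_speed
    for s in speeds:
        if abs(s - previous) > tol:
            count += 1
        previous = s
    return count


if __name__ == "__main__":
    print("instant:", accelerating_frames(speed_profile(1.4, 90)))                  # 1
    print("eased:  ", accelerating_frames(speed_profile(1.4, 90, ramp_frames=45)))  # 45
```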

Sim sickness is much less prevalent when the field of view is narrower; however, this also reduces immersion & presence. Some suggest reducing the FOV only in those moments that could be particularly nauseating. Others have suggested a kind of small-FOV preview overlay during movement, which expands to full screen when the movement ends.

Reducing the field of view may work because it reduces vection.
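One way to implement "reduce the FOV only when needed" is a vignette whose size follows the current virtual motion. A minimal sketch in which all numbers (a 100-degree unrestricted view, a 60-degree minimum, full restriction at 3 m/s or 90 deg/s of virtual turning) are hypothetical tuning values rather than recommendations:

```python
def _clamp01(v: float) -> float:
    return max(0.0, min(1.0, v))


def vignette_fov_deg(linear_speed_m_s: float, turn_speed_deg_s: float,
                     full_fov_deg: float = 100.0, min_fov_deg: float = 60.0,
                     speed_for_min: float = 3.0, turn_for_min: float = 90.0) -> float:
    """Visible field of view for the current virtual (e.g. joystick-driven) motion.
    Whichever of linear or turning motion is stronger drives the restriction;
    at rest the full field of view is restored."""
    t = max(_clamp01(linear_speed_m_s / speed_for_min),
            _clamp01(abs(turn_speed_deg_s) / turn_for_min))
    return full_fov_deg - t * (full_fov_deg - min_fov_deg)


if __name__ == "__main__":
    for lin, turn in ((0.0, 0.0), (1.5, 0.0), (3.0, 0.0), (0.0, 45.0), (0.0, 180.0)):
        print(f"{lin:.1f} m/s, {turn:5.1f} deg/s -> {vignette_fov_deg(lin, turn):.0f} deg")
```

The inputs should be the artificial (virtual) motion only, not real head movement, which is already matched 1:1 and does not cause the conflict.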

Placing a reference frame around the point of view can help stabilize the senses -- which is why cockpit-based simulations (inside cars, spaceships, robots, or even just a helmet, etc.) can handle much greater accelerations and rotations without inducing sickness. It might be as simple as having a reference that says which way is "body-forward", but it also taps into the reduced field of view/vection as above.

However, the reference frame might be able to be semi-transparent, or even absent for much of the time; more research is needed. See also the "canvas mode" here.

Disturbance is reduced if some body actions accompany a movement. Some games use a 'running in place' or 'paddling with the hands' behaviour to trigger walking in the virtual space:

Or swimming etc.:

Or grappling hooks:

Other experiences use the direction of the hands or fingers to indicate direction of motion, which appears to reduce nausea.

Overview of locomotion methods:

Many of these solutions are utilized in EagleFlightVR, which has had very strong reviews commenting about the lack of nausea.

It is helpful to think of the HMD as the camera into a virtual world that is aligned to the real world. (At Weird Reality, I heard several speakers describe the HMD as a 'head-mounted camera'.)

The rendered image must correspond directly with the user's physical movements; do not manipulate the gain of the virtual camera’s movements. - Best practices, Oculus

The golden rule for designers is that we must never take control of the camera away from the viewer, not even for a moment. This means no fixed-view cut-scenes or 'cinematics', no full-screen imagery, no lens and framing control, etc. It also means no motion blur, depth-of-field effects, etc., since these likewise take control away from the viewer.

One of the big challenges with VR storytelling lies within the constraints on camera movement forced upon us by this tiny detail called simulator sickness. Quick zoom in to focus on a detail – nope, not possible, you can’t zoom in VR. Nice dolly shot moving around the scene – be careful or the viewer might have a look at what he had for breakfast instead of comfortably watching your experience... the safest bet is not having continuous camera movement at all. - The limbo method

Since the immersant is free to look in any direction they choose, you need to make sure all directions are valid, potentially valuable, and that nothing essential will be missed because 'they were looking the wrong way'.

One of the most disturbing camera motions of all is the oscillating 'head bob' and other 'camera shake' effects often added to games. (The head bob in particular falls right around 3-5 Hz, a frequency range that is particularly nauseating.)

Our inner ear detects various changes in velocity, or accelerations, but it doesn’t detect constant velocity. Because of this, developers can have someone moving at a constant speed in a relatively straight line and the simulator sickness effects will be greatly reduced. - 5 ways to reduce motion sickness in VR

There are plenty of examples of VR projects that also use moving cameras. Generally the predetermined component of the camera motion is slow, at constant speed, in a straight line in the world, or only in the direction the person is facing. This is the kind of "rails" experience that has disappointed many, and is still nauseating for some.

Avoid visuals that upset the user’s sense of stability in their environment. Rotating or moving the horizon line or other large components of the user’s environment in conflict with the user’s real-world self-motion (or lack thereof) can be discomforting. - Best practices, Oculus

The head-height above ground should be consistent with the immersant's own height, whether sitting or standing.

Movement at real-world speeds is more comfortable. Humans walk at about 1.4 meters per second, which is much slower than 'walking' in most video games.
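A minimal sketch combining this with the constant-velocity advice quoted earlier, assuming thumbstick locomotion on a flat floor. It moves at the ~1.4 m/s walking pace and deliberately skips smooth acceleration. The function name, dead-zone value and 90 Hz frame rate are hypothetical.

```python
import math

WALK_SPEED_M_S = 1.4  # typical human walking speed, as cited above
DEAD_ZONE = 0.2       # hypothetical: smaller stick deflections count as "stopped"


def locomotion_step(pos_xz, stick_x, stick_y, yaw, dt, speed=WALK_SPEED_M_S):
    """Advance a stick-driven player position (x, z) by one frame of dt seconds.

    Velocity jumps straight to a constant walking speed while the stick is
    pushed (no ease-in or ease-out), so acceleration is confined to the
    instants of starting and stopping. Yaw is in radians; at yaw 0 the
    player faces +z with +x to their right.
    """
    magnitude = math.hypot(stick_x, stick_y)
    if magnitude < DEAD_ZONE:
        return pos_xz
    # Normalize so a half-pushed stick still moves at walking speed.
    sx, sy = stick_x / magnitude, stick_y / magnitude
    # Rotate stick input into world space:
    # forward = (sin yaw, cos yaw), right = (cos yaw, -sin yaw).
    dx = sy * math.sin(yaw) + sx * math.cos(yaw)
    dz = sy * math.cos(yaw) - sx * math.sin(yaw)
    return (pos_xz[0] + dx * speed * dt, pos_xz[1] + dz * speed * dt)


pos = (0.0, 0.0)
for _ in range(90):  # one second at a hypothetical 90 Hz frame rate
    pos = locomotion_step(pos, 0.0, 0.5, 0.0, 1.0 / 90.0)
print(pos)  # about 1.4 m forward along +z
```

Snapping straight to a fixed speed follows the quoted reasoning about the inner ear; some projects prefer a very short ramp instead, which is a design trade-off worth testing.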

Objects drawn from the real world should have a consistent and usually accurate scale.

On the other hand, miniature worlds work well: roughly table-sized, viewed from a third-person perspective.
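For a sense of the numbers involved, here is a sketch of fitting a world onto a table. A single uniform scale factor keeps everything proportionally consistent, which matters for the scale advice above. The 1 m table width, the 0.75 m table height and the function names are assumed for illustration.

```python
def miniature_scale(world_extent_m, table_extent_m=1.0):
    """Uniform scale factor that shrinks a world of the given width onto a table."""
    return table_extent_m / world_extent_m


def world_to_table(point, world_center, table_center, scale):
    """Map a world-space point onto the table model (uniform scale about its center)."""
    return tuple(tc + (p - wc) * scale
                 for p, wc, tc in zip(point, world_center, table_center))


# A hypothetical 200 m-wide village shown on a 1 m table whose top is 0.75 m high.
s = miniature_scale(200.0, 1.0)
print(s)
print(world_to_table((100.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.75, 0.0), s))
```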

Avoid confined spaces.

We cannot focus on objects closer than about 5 cm (for some people, as far as 20 cm), so it is good to avoid placing virtual content too close to the head. In general it is also disturbing for objects to intersect the body, whether or not a virtual body exists. Static objects are usually fine, as most people will naturally move around them, but dynamic objects may need to be aware of where the person is.
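A sketch of making dynamic objects "aware of where the person is", assuming positions in meters and using the 20 cm figure as a conservative minimum distance. A real system would more likely steer or slow objects before they arrive rather than snap them outward; the function and constant names are hypothetical.

```python
import math

MIN_DISTANCE_M = 0.2  # conservative choice: the ~20 cm near point mentioned above


def keep_out_of_face(obj_pos, head_pos, head_forward, min_distance=MIN_DISTANCE_M):
    """Return a position for a dynamic object at least min_distance from the head.

    Objects already far enough away are left untouched; closer ones are pushed
    radially outward. head_forward (a unit vector) is only used in the
    degenerate case where the object sits exactly at the head position.
    """
    offset = [o - h for o, h in zip(obj_pos, head_pos)]
    dist = math.sqrt(sum(c * c for c in offset))
    if dist >= min_distance:
        return tuple(obj_pos)
    if dist == 0.0:
        offset, dist = list(head_forward), 1.0
    scale = min_distance / dist
    return tuple(h + c * scale for h, c in zip(head_pos, offset))


print(keep_out_of_face((0.0, 1.6, 0.1), (0.0, 1.6, 0.0), (0.0, 0.0, 1.0)))
```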

Everything should be in-world. Nothing should "stick" to the viewer's headset -- not even messages/menus, head-up displays, etc.

Maintain VR immersion from start to finish—don’t affix an image in front of the user (such as a full-field splash screen that does not respond to head movements), as this can be disorienting... Even in menus, when the game is paused, or during cutscenes, users should be able to look around. - Best practices, Oculus
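A minimal sketch of a world-locked menu, assuming yaw-only head orientation (x right, y up, z forward at yaw 0, positive yaw turning toward +x). The panel is placed in the world once, in front of the user, and stays put as they look around; it is re-placed only if it drifts well out of view. The 1.5 m distance, the 60° threshold and the function names are hypothetical; dropping the re-placement step gives a fully static panel.

```python
import math


def place_panel(head_pos, head_yaw, distance=1.5):
    """World position `distance` meters ahead of the user, at eye height."""
    return (head_pos[0] + distance * math.sin(head_yaw),
            head_pos[1],
            head_pos[2] + distance * math.cos(head_yaw))


def update_panel(panel_pos, head_pos, head_yaw, threshold_deg=60.0, distance=1.5):
    """Keep the panel fixed in the world unless the user has turned far away from it."""
    panel_yaw = math.atan2(panel_pos[0] - head_pos[0], panel_pos[2] - head_pos[2])
    # Signed angle from the current heading to the panel, wrapped into [-180, 180).
    diff = math.degrees((panel_yaw - head_yaw + math.pi) % (2 * math.pi) - math.pi)
    if abs(diff) > threshold_deg:
        return place_panel(head_pos, head_yaw, distance)
    return panel_pos


head = (0.0, 1.6, 0.0)
panel = place_panel(head, 0.0)
panel = update_panel(panel, head, math.radians(30))  # small glance: panel stays put
panel = update_panel(panel, head, math.radians(90))  # turned away: panel re-placed ahead
print(panel)
```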

User interface elements are uncomfortable if they are stuck to the headset, better if they are transparent overlays that keep the world's orientation, and best if they are actually objects in the world. They could be:

It is also disturbing to be suddenly and unexpectedly moved in the world by collisions with objects or other dynamic impacts. However, if collisions do not stop camera motion, people will be able to simply walk through walls, poke their heads inside objects, float rather than fall, and so on.
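One possible compromise, sketched under the assumption that walls are axis-aligned boxes on the floor plane: collisions stop *artificial* locomotion (stick or scripted movement of the rig), but the system never pushes the person's tracked head. Motion simply halts at the wall instead of jolting them, at the cost of still letting someone physically lean through geometry. This is not a technique the notes above prescribe; the class and function names and the 0.25 m body radius are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box2D:
    """Axis-aligned wall footprint on the floor plane (x, z), in meters."""
    min_x: float
    min_z: float
    max_x: float
    max_z: float

    def blocks(self, x, z, radius):
        """True if a circle of the given radius around (x, z) overlaps the box."""
        # Closest point on the box to the circle's center.
        cx = min(max(x, self.min_x), self.max_x)
        cz = min(max(z, self.min_z), self.max_z)
        return (x - cx) ** 2 + (z - cz) ** 2 < radius ** 2


def try_move_rig(rig_xz, step_xz, head_offset_xz, walls, radius=0.25):
    """Apply one artificial-locomotion step only if the body stays clear of walls.

    Only the rig (stick or scripted motion) is ever refused. The tracked head
    offset is read but never changed, so the person is never shoved by the
    system; a refused step simply leaves the rig where it was.
    """
    new_rig = (rig_xz[0] + step_xz[0], rig_xz[1] + step_xz[1])
    body_x = new_rig[0] + head_offset_xz[0]
    body_z = new_rig[1] + head_offset_xz[1]
    if any(wall.blocks(body_x, body_z, radius) for wall in walls):
        return rig_xz
    return new_rig


wall = Box2D(-5.0, 2.0, 5.0, 3.0)
print(try_move_rig((0.0, 1.6), (0.0, 0.3), (0.0, 0.0), [wall]))  # step refused
```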

Vertigo is a real phenomenon in VR. The 'walking on a plank over an abyss' test is one of the most tried-and-tested ways of evoking bodily responses using VR; it can literally bring people to tears. Claustrophobia can also be readily evoked.

More generally, fear- or shock-inducing content is much more powerful in VR, as it can approach the body and trigger physiological responses in a way that conventional screen-based media cannot. For example, too much action around the user (flying bullets, explosions, moving vehicles, etc.) can be distressing, where it would be quite acceptable in a screen-based film or game. For good reason, many early VR experiences are in the horror genre.

VR is an immersive medium. It creates the sensation of being entirely transported into a virtual (or real, but digitally reproduced) three-dimensional world, and it can provide a far more visceral experience than screen-based media. Enabling the mind’s continual suspension of disbelief requires particular attention to detail... - Best practices, Oculus

The closer we get to experiences we have every day (e.g., walking), the higher the risk of creating perceptual cues that do not match reality. This may be related to the uncanny valley. Characters that do not look at you, or do not respond to you properly, can be particularly disturbing.
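As a sketch of the "characters looking at you" point, here is the basic geometry for turning a character's head toward the user's tracked head position. The neck limits (70° yaw, 40° pitch), the axis convention and the function names are assumptions for illustration; a real character system would also blend the motion over time.

```python
import math


def _clamp(value, limit):
    """Clamp value into [-limit, limit]."""
    return max(-limit, min(limit, value))


def look_at_angles(npc_head, npc_body_yaw_deg, user_head,
                   max_yaw_deg=70.0, max_pitch_deg=40.0):
    """Head yaw and pitch (degrees, relative to the NPC's body) that face the user.

    Uses x right, y up, z forward at yaw 0, with positive yaw turning toward +x
    and positive pitch looking up. Results are clamped to assumed neck limits;
    when the yaw limit is hit, the body should turn too.
    """
    dx = user_head[0] - npc_head[0]
    dy = user_head[1] - npc_head[1]
    dz = user_head[2] - npc_head[2]
    target_yaw = math.degrees(math.atan2(dx, dz))
    # Wrap the yaw relative to the body into [-180, 180).
    rel_yaw = (target_yaw - npc_body_yaw_deg + 180.0) % 360.0 - 180.0
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    return _clamp(rel_yaw, max_yaw_deg), _clamp(pitch, max_pitch_deg)


print(look_at_angles((0.0, 1.6, 0.0), 0.0, (1.0, 1.6, 1.0)))  # roughly (45, 0)
```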

More abstract worlds are less likely to cause such conflicts; non-photorealistic environments in many ways have advantages. Overly realistic environments can also confuse immersants -- who may begin to expect that everything in the environment can be interacted with, and be disappointed when it isn't.

Alternatively, let all things be interactive:

Many people find it disturbing to look down and see no body, especially in sedentary experiences. This may be because a body provides a reference frame with a logical anchor in the world. However, some say that looking down and seeing somebody else's body is equally disturbing, and others have shown that even a reference frame that makes no ontological sense can help. More research is needed!

A virtual avatar ... can increase immersion and help ground the user in the VR experience, when contrasted to representing the player as a disembodied entity. On the other hand, discrepancies between what the user’s real-world and virtual bodies are doing can lead to unusual sensations (for example, looking down and seeing a walking avatar body while the user is sitting still in a chair). - Best practices, Oculus

A non-realistic body might be better than a pseudo-realistic one. Perhaps it need not even be human (or humanoid). This avoids mismatches of size, gender, skin color, age, etc. that could create cognitive dissonance. Alternatively, give immersants control over their avatar's appearance.

When it comes to modeling player avatars in VR, abstract trumps the real. Malaika says Valve has found that players tend to feel less immersed in games that try to model hands realistically, and more immersed in games with cartoony hands. - Valve advice for VR

People will typically move their heads/bodies if they have to shift their gaze and hold it on a point farther than 15-20° of visual angle away from where they are currently looking. Avoid forcing the user to make such large shifts to prevent muscle fatigue and discomfort. - Best practices, Oculus
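A designer might check content placement against this guideline with a small helper like the following. It is a sketch assuming gaze is approximated by the head's forward direction (no eye tracking) and using the lower 15° end of the quoted range; the function names are hypothetical.

```python
import math


def angle_deg(a, b):
    """Angle between two 3D direction vectors, in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    # Clamp to guard against rounding pushing the cosine slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def needs_head_turn(gaze_dir, head_pos, target_pos, threshold_deg=15.0):
    """True if looking at target_pos means shifting gaze more than threshold_deg."""
    to_target = [t - h for t, h in zip(target_pos, head_pos)]
    return angle_deg(gaze_dir, to_target) > threshold_deg


head = (0.0, 1.6, 0.0)
forward = (0.0, 0.0, 1.0)
print(needs_head_turn(forward, head, (0.2, 1.6, 1.0)))  # about 11 degrees off-axis
print(needs_head_turn(forward, head, (1.0, 1.6, 1.0)))  # 45 degrees off-axis
```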

Keep most content at a comfortable viewing angle. It is uncomfortable to look up or down for very long, or to twist sideways frequently or for sustained time.

Don’t require the user to swivel their eyes in their sockets to see the UI. Ideally, your UI should fit inside the middle 1/3rd of the user’s viewing area; otherwise, they should be able to examine it with head movements. - Best practices, Oculus
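The "middle third" advice can be turned into a placement distance. This sketch assumes "middle 1/3rd" means one third of the headset's horizontal field of view (90° here, a placeholder, since real headsets vary) and a flat panel centered in view. A panel of width w at distance d subtends 2·atan(w / 2d), so the minimum distance that fits a given width is d = w / (2·tan(FOV/6)). The function names are hypothetical.

```python
import math


def angular_width_deg(width_m, distance_m):
    """Horizontal angle subtended by a flat panel centered in view."""
    return math.degrees(2.0 * math.atan(width_m / (2.0 * distance_m)))


def min_distance_for_middle_third(width_m, fov_deg):
    """Closest distance at which the panel spans no more than a third of the FOV."""
    half_angle = math.radians(fov_deg / 3.0) / 2.0
    return width_m / (2.0 * math.tan(half_angle))


d = min_distance_for_middle_third(1.0, 90.0)
print(round(d, 3))                          # 1.866
print(round(angular_width_deg(1.0, d), 1))  # 30.0
```

Under these assumptions, a 1 m-wide menu should sit roughly 1.9 m away or farther.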

And if you expect people to remain seated throughout the experience, remember that they will rarely, if ever, see things behind them.

Refrain from using any high-contrast flashing or alternating colors that change with a frequency in the 1-30 Hz range. This can trigger seizures in individuals with photosensitive epilepsy. - Best practices, Oculus
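A rough screening sketch, assuming you can sample the average luminance (normalized to 0..1) of a screen region once per rendered frame. It counts high-contrast jumps between consecutive frames and converts them to flashes per second. The 0.5 contrast threshold is an arbitrary placeholder and the function names are hypothetical; note that it only catches abrupt frame-to-frame changes, not gradual fades, and window edges bias the estimate slightly low.

```python
def flash_frequency_hz(luminance, frame_rate_hz, contrast_threshold=0.5):
    """Estimate flashes per second from per-frame luminance samples in [0, 1]."""
    transitions = sum(
        1 for prev, cur in zip(luminance, luminance[1:])
        if abs(cur - prev) >= contrast_threshold
    )
    duration_s = len(luminance) / frame_rate_hz
    # A bright->dark plus a dark->bright transition make one flash cycle.
    return transitions / 2.0 / duration_s


def in_risky_band(freq_hz, low_hz=1.0, high_hz=30.0):
    """True if the estimated frequency falls in the 1-30 Hz range quoted above."""
    return low_hz <= freq_hz <= high_hz


# One second at a hypothetical 90 Hz refresh: 9 bright frames, 9 dark frames, repeating.
samples = [1.0 if (i // 9) % 2 == 0 else 0.0 for i in range(90)]
f = flash_frequency_hz(samples, 90.0)
print(f, in_risky_band(f))
```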

The images presented to each eye should differ only in terms of viewpoint; post-processing effects (e.g., light distortion, bloom) must be applied to both eyes consistently as well as rendered in z-depth correctly to create a properly fused image. - Best practices, Oculus
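To make "differ only in terms of viewpoint" concrete, here is a sketch of deriving the two eye positions from one head pose. It assumes yaw-only orientation (x right, y up, z forward at yaw 0) and a placeholder 63 mm interpupillary distance; in practice the headset runtime supplies per-eye poses. Everything else about the two renders, including any post-processing, would be identical.

```python
import math

DEFAULT_IPD_M = 0.063  # placeholder; real values come from the headset


def eye_positions(head_pos, head_yaw, ipd_m=DEFAULT_IPD_M):
    """Left and right eye positions for a head at head_pos with the given yaw.

    Each eye is the head position offset by half the IPD along the head's
    right vector. Yaw is in radians, positive turning toward +x.
    """
    right = (math.cos(head_yaw), 0.0, -math.sin(head_yaw))
    half = ipd_m / 2.0
    left_eye = tuple(h - half * r for h, r in zip(head_pos, right))
    right_eye = tuple(h + half * r for h, r in zip(head_pos, right))
    return left_eye, right_eye


left, right = eye_positions((0.0, 1.6, 0.0), 0.0)
print(left, right)
```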

Hardly a solution, but there are a few techniques that people susceptible to sim sickness can make use of:

When a land-lubber steps onto a boat for the first time, often the rocking and variations in vestibular motion from the ocean causes a feeling of ‘sea-sickness’ that is not too different from simulator sickness. However, for most people, after a few hours or days that feeling typically dissipates as they get what is commonly referred to as their ‘sea legs.’ It is something that experienced seamen are very well adapted to. It is also something, I would argue, that replicates itself in VR. - 5 ways to reduce motion sickness in VR

A popular household remedy in Asia is to rub eucalyptus leaves together and inhale the scent produced from them. - Sim Sickness guide on Oculus forums

See also:

(Elements borrowed from Kevin Burke's guide, Simulator Sickness)

Tips from a team who ported a base-jumping game to VR

See the Simulator Sickness questionnaire